AI doesn't have to be this bad part 1: Attribution, compensation, & fair use

Real impacts AI is having on society + optimistic thoughts for improvement

When I get into discussions with people about potential AI harms, I’ve noticed the dangers that captivate people most are the existential ones. Conversations inevitably drift towards things like the replaceability of human beings, the collapse of relationships and interpersonal skills, the creation of deadly bioweapons, and even the end of civilization.

I’m not trying to diminish these hot takes or say we shouldn’t discuss them, but sometimes dreaming too big about AI utopia or dystopia distracts us from the concrete societal problems it’s causing today.

There are very real ways to develop and deploy this technology more equitably, and exploring and discussing these alternatives is critical. We have agency, not the machines.

So welcome to my new series, where I’ll share very tangible ways AI is negatively impacting our world, along with a few optimistic thoughts on how to address them.

Starting with… attribution, compensation, and fair use.

Attribution, compensation, and fair use

Text-, image-, and audio-generating AI models are only able to create new content after being shown lots and lots of existing content. These models can only create the human-like outputs we’ve all experienced because they’re first trained on massive datasets created by humans.

For example, image generation models are only able to produce high-quality images after they've been shown millions of existing images from artists and photographers.

Text generation models can only generate nuanced, fluent, descriptive text after first learning from text written by humans in the form of books, scripts, articles, and essays.

Right now, AI companies scrape this data, make copies of it, and use it to train their proprietary models. The people who created this content are not being compensated or credited for their work, or even asked for their consent. As a result, more than 30 lawsuits had been filed against AI companies as of October 2024. Notably, this list includes The New York Times, which is suing OpenAI and Microsoft, saying their AI models were “built by copying and using millions of The Times’s copyrighted news articles, in-depth investigations, opinion pieces, reviews, how-to guides”.

OpenAI maintains that scraping this data and training their models on it is indeed fair use. However, they were absolutely furious when they came to believe DeepSeek had been trained on outputs from OpenAI’s models, revealing that they support protections for the outputs of AI models, but not for the outputs of *actual* human beings.

It was a real foreshadowing of what it looks like to live in a society where humans serve AI instead of AI serving humans.

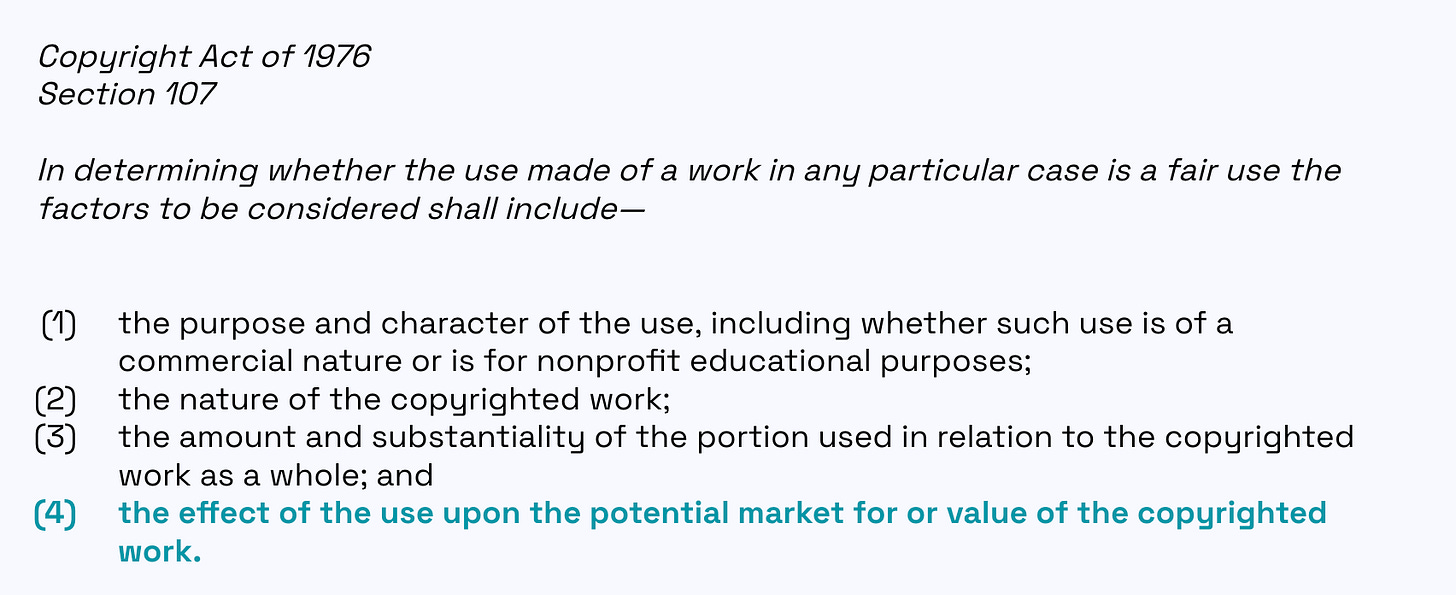

In the US specifically, the legality of using copyrighted material to train AI models largely depends on whether or not the use is considered fair use, which is defined in Section 107 of the Copyright Act of 1976. For the generative AI case, one of the main factors in making this determination is “the effect of the use upon the potential market for or value of the copyrighted work.” In other words, does the output of generative AI models impact the market value of books, articles, images, songs, etc.?

Or to put it even more simply, does generative AI compete with its training data?

This is an important distinction, and it highlights how generative AI differs from things like Instagram filters, iPhone cameras, Photoshop, or other tools that made parts of the creative process more accessible.

Generative AI models need the data created by humans in order to generate decent outputs. Artists, writers, musicians, and creators made the data that fuels these models, and now they are having to compete directly with the models’ outputs.

One of the least compelling arguments I’ve seen in favor of companies using this data without compensating the creators is the comparison between what AI does and what humans do. Humans, the argument goes, take inspiration from paintings seen in museums, songs heard at concerts, articles read on Substack…

This argument drives me slightly crazy for a few key reasons:

AI scale ≠ human scale: Even though humans absorb creative inspiration from numerous sources, they can’t turn around and churn out a nearly infinite amount of content the way AI can. AI is a scalable competitor in a way no human could ever be. No graphic designer can make a million images in a day and no composer can generate 10 songs an hour. AI can.

Individual artists ≠ AI corporations: Artists and creators contribute back to the ecosystem they’ve drawn inspiration from by paying for classes, mentoring others, participating in guilds and collectives, etc. The large corporations behind AI offer no meaningful contributions back to the ecosystems they have stolen data from.

Human learning ≠ AI training: Lastly, and I would say most critically, no matter how much anthropomorphic language we ascribe to machines, they are still just machines. There is no natural law that necessitates we treat AI a certain way because we also treat humans that way. The mechanisms behind human learning and AI training are not the same, and AI does not deserve the same protections we offer to human beings.

What does a better version of this look like?

License data. AI companies should only train their models on licensed data.

AI companies pay for compute and talent, and they should also pay for data. By paying for talent and not for data, we are, as a society, overvaluing the skills of a software engineer building an image-generating service and devaluing the skills of an artist who created the images that the model was trained on. Both of these contributions are critical to creating high-quality AI models, both require effort and skill, and we should treat them with equal respect. This is achievable if models are trained on data obtained via license agreements.

There are already companies training models on licensed data, and the non-profit Fairly Trained currently certifies AI models that are trained on copyrighted data obtained with the creators’ consent. They’ve already certified 10+ models, so next time you want to generate AI content, take a look at the list to see if one fits your use case.

A lot of AI teams will claim paying for data is not financially viable (while they simultaneously shell out billions of dollars to build AI infrastructure). But former OpenAI CTO Mira Murati once claimed that if AI replaces a creative job, maybe those jobs “shouldn’t have been there in the first place.” By the same logic, I think it’s fair to say that if your product can’t survive without stealing content created by millions of skilled artists and writers you never compensate, then, you know, perhaps your business shouldn’t have been there in the first place.

Let’s compensate the creators who made this technology possible.

Feeling extra hyped up by all this injustice? Sign the Statement on AI Training.